Source: Screenshot of Perusall's landing page by Markus Stauff

Teaching with Perusall

Jan Teurlings and Markus Stauff on the (dis-)advantages of tool-based, collaborative reading

In the opening blog post to the special series Researching, Teaching, and Learning with Digital Tools, Josephine Diecke, Nicole Braida, and Isadora Campregher Paiva have invited readers to explore the wide-ranging applications of digital methods and tools in film and media studies. Based on the areas of teaching and (self-)study, research, (interactive) websites as well as project management and project communication, specific use cases with their advantages and limitations will be presented and critically examined in the course of the series. The aim is to provide an open platform for discussing the various approaches and perspectives in order to focus on the dimensions of and workflows with digital tools.

The special series continues with a contribution by media scholars Jan Teurlings and Markus Stauff, who share their experiences with the collaborative reading tool Perusall for online teaching. Starting with the tool's potential and promises for teachers and students, the authors discuss the extent to which these come to fruition in practice and why the tool is only partially suitable for use in media studies.

Introduction

For two years we have been using the collective annotation tool Perusall in our jointly taught MA course Cross-Media Infrastructures at the University of Amsterdam (UvA). All readings of the course are uploaded to the tool (which is integrated in our university’s default online learning environment, Canvas) and students are asked to annotate the texts before the seminar meetings.

At the UvA, one of Perusall’s founders, Harvard University Professor of Physics Eric Mazur, was invited to present the tool as part of the university’s broader endeavor to move teaching towards «blended learning» and «flipped classrooms» – didactic strategies that favor online tools for «knowledge transmission» so that classroom time can be used to focus on questions, debate, and the application of knowledge.

«Every student prepared for every class»

On its website, Perusall boasts: «Every student prepared for every class.» The promise of a more careful engagement with the texts before seminar meetings was indeed our main motivation for using the tool. Because we are somewhat ambivalent about the stacking of ever more teaching tools, which often get replaced by a seemingly more powerful alternative after two years, we made participation voluntary (with the proverbial carrot of an extra 0.5 points on the final grade for students who used the tool consistently). The majority of students participated, and we did indeed note improved in-class discussions.

In terms of engagement with the course readings, then, the tool clearly helps. The same could, however, also be achieved by more old-fashioned means, such as obligatory reading reports. One therefore also needs to consider what type of engagement the tool encourages, and here our experiences are decidedly mixed. Within the tool, students are required to add a certain number of questions and annotations to each text. The default is six or seven annotations per text, but this, like some other parameters, can be adjusted by the teacher. The process is made a «collective» one by allowing students to respond to (or simply upvote) each other’s annotations.

Perusall’s unique selling proposition is that the students’ input is algorithmically graded. This is supposed both to stimulate non-superficial and reflexive forms of engagement – an important concern when it comes to the implementation of «blended» or «flipped» teaching strategies – and to keep the workload for lecturers to a minimum (a promise which, as we will show below, remains largely illusory). It is important to mention here that Perusall has by now become a commercial business, but it explicitly avoids external investment, allows for the deletion of all courses and personal data at any time, and, according to its Terms of Service and Privacy Policy, does not seem to sell any data at the moment. The claim that all decisions are made «in the interests of students, instructors, and educators everywhere» seems credible.

Locked-in Reading

Still, the algorithm shapes the mode of engagement. When students open the tool, it transparently tells them that their contributions will be evaluated based on their length (i.e. short contributions like «I don’t understand this» are negatively assessed), their distribution across the document, and the overall time spent reading the article. This last criterion in particular tends to make Perusall both the default and the supposedly superior way of reading academic texts: time spent on offline reading is «lost» on the algorithmic grading, and the idea that collective annotation deepens the learning process partly disqualifies other modes of reading. Our university’s Perusall contact person even recommended that we make the readings available exclusively through the tool and delete all other copies from the online learning environment. We did not do this because (being media scholars) we appreciate materially diverse ways of reading and annotating texts. Not least for in-class discussions, it is helpful if students have their idiosyncratically annotated versions of a text at hand. According to Perusall, the push notifications reminding students to go back to the text are based on research showing that iterative reading deepens understanding, but many students experienced them as stressful; only in our second year of using the tool did we find out that they can at least partly be switched off. As is often the case, it is surprisingly difficult for lecturers to get a full sense of how the tool looks and works from the students’ side.

Double Reading

Since the algorithm penalizes reading outside of the tool, students are actually reading two texts at the same time: the primary text and the commentary previously posted by other students. As a result, the primary text is not engaged with on its own terms, but rather through the looking glass of the previous commentary. This does not necessarily lead to detrimental results, especially if the previous discussions are of high quality, but it does lead to a form of double reading in which the reading of the primary text is immediately joined with the commentary. Especially for denser or more abstract texts, the juggling of two layers of meaning (text and paratext) might distract more than it improves understanding. It also tends to encourage interpretations that are narrower in scope: instead of bringing together many divergent views that were forged in private, with Perusall the primary reading is already social, and hence more constrained and shaped by the group. This tendency to socially discipline interpretations is exacerbated by the fact that the tool allows students to upvote each other’s contributions, which activates the mechanism of social approval. Students with a more solid background in the discipline are less affected by this, as they have the necessary knowledge to situate the readings. We cannot offer empirical proof here, but our impression was that students with less self-confidence struggle even more to develop and trust their own reading of a text. Additionally, we learned from students that the collective annotation made more sense to them when, due to the Covid-19 pandemic, there were no offline seminar meetings and the annotations gave them some compensatory sense of sociality. As with so many other digital teaching tools, the pandemic clearly added to the persuasiveness of Perusall.

Community of Practice?

A more benevolent interpretation could argue that reading and commenting socially brings a community of practice into being. With this term, Etienne Wenger stresses that learning is a social process, and he distinguishes between three interrelated dimensions: mutual engagement, joint enterprise, and shared repertoire.1 It is clear that those three dimensions are at play in Perusall, but with one important caveat: whereas a community of practice envelops the entire learning process, Perusall only operates on a tiny fragment of the learning cycle, namely the reading of literature. While a lot of research work in the humanities admittedly involves reading literature, it cannot be reduced to it. And, given the importance of engaging carefully but generously with a text (i.e. close reading), too early a socialization of interpretation perhaps has more costs than benefits.

Commenting for the Algorithm

Finally – and unsurprisingly – we noted a tendency for students to «write for the algorithm», meaning that they produce the type of commentary that they know the algorithm will score highly. When we discussed and evaluated the tool in class, students reported that they had looked up how the tool worked and had used this knowledge when writing their commentary. Initially, we were inclined to follow Deng Xiaoping in that it does not matter whether the cat is black or white, as long as it catches mice. But after giving it more thought and discussing it with the students, it became clear that this does come with drawbacks, because it instils a calculative reason in students’ attitudes towards the course literature. The texts were seen purely as a canvas on which to pin down six topics interesting enough to allow for a smart annotation or well-formulated question. The cost of this is a degradation not so much of the quality of the commentary as of the attitude with which students approach intellectual work. The requirement of six annotations per text in particular was experienced as a straitjacket by many students, who wanted more autonomy in deciding on which texts to spend more (or less) time.

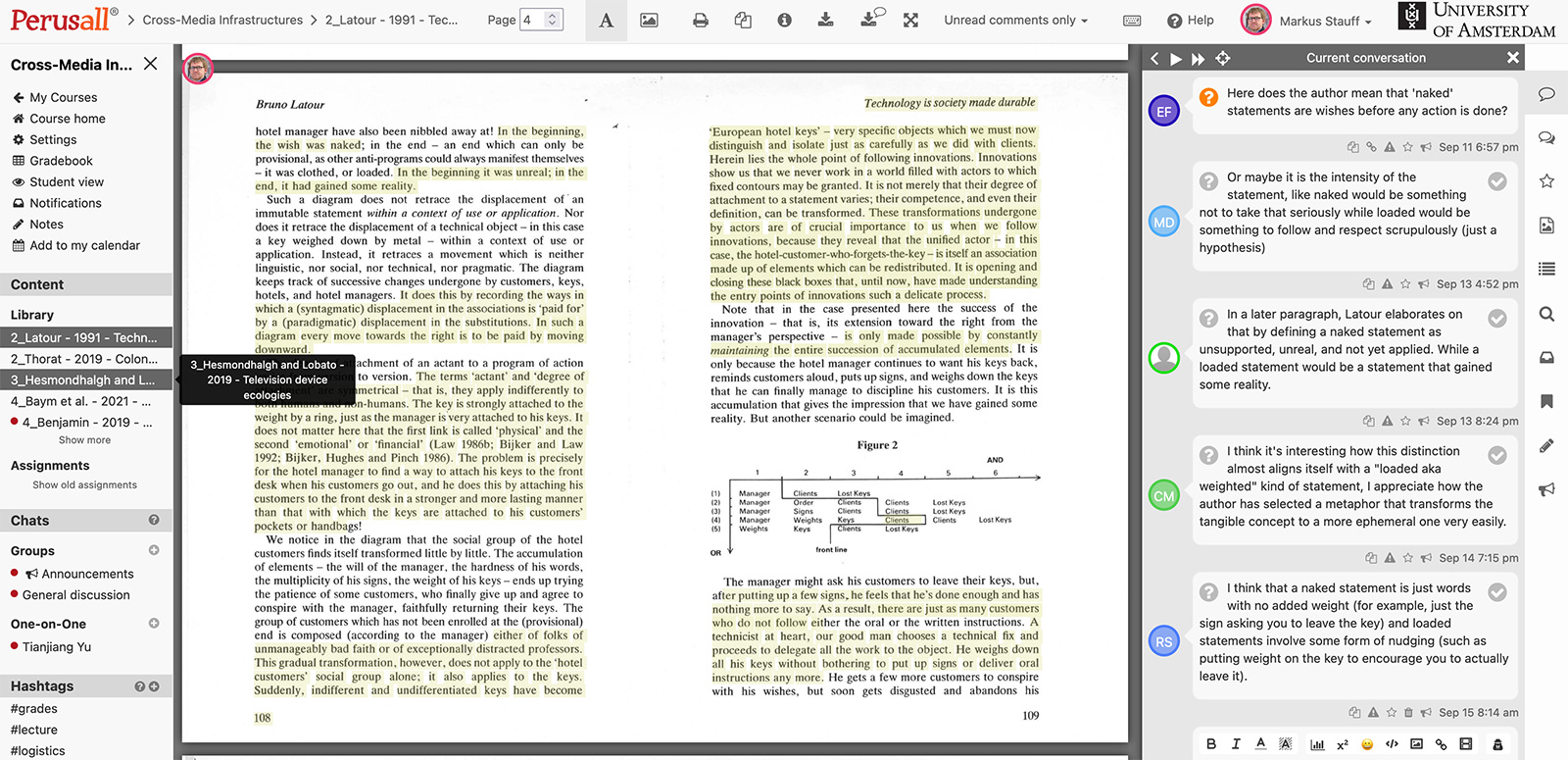

Figure 2: Annotation abundance – each highlight typically has overlapping comments

Discussing the Algorithm

More generally, our students were suspicious of algorithmic grading and voiced concerns. When we randomly checked some of the grading, we found it largely in line with our own assessment; only obviously sloppy work earned students a fail. Nevertheless, the critical attitude towards algorithms was very welcome in a course on media infrastructures, for at least two reasons. First, it provoked a debate about algorithms in general and about specific parameters in particular. Since Perusall’s interface allows for some adjustments (e.g. the relative weighting of reading time, number of upvotes, and number and quality of annotations), the combination of transparent and opaque algorithmic layers can easily be addressed. Second, the comparison between algorithmic and «human» grading (which itself increasingly tends to become partly algorithmicized by rubrics, grading schemes etc.) allows for a more reflexive approach towards all kinds of grading and their uneven mix of trust and transparency. Here it is the combination of not too heavy consequences (voluntary participation) with a not yet too elaborate algorithm (the results of which can still be compared to human judgment) that allows the application to offer teaching moments.
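For readers who want to make the role of such adjustable weights tangible in class, the following is a purely illustrative toy model. It is not Perusall's actual algorithm, which is only partly documented; the component names, the 0 to 1 scale, the weights, and the example student are all hypothetical assumptions. It merely sketches how shifting the relative weight of reading time versus annotations could change the score of one and the same student.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """Hypothetical per-student measurements, each normalised to 0..1."""
    reading_time: float
    upvotes: float
    annotations: float


def weighted_score(activity: Activity, weights: dict[str, float]) -> float:
    """Return the weighted average of the components (a toy model only)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(getattr(activity, name) * w for name, w in weights.items()) / total


# Hypothetical student who reads mostly offline but annotates carefully.
offline_reader = Activity(reading_time=0.2, upvotes=0.5, annotations=0.9)

# Two hypothetical teacher settings for the relative weighting.
time_heavy = {"reading_time": 0.6, "upvotes": 0.1, "annotations": 0.3}
annotation_heavy = {"reading_time": 0.1, "upvotes": 0.1, "annotations": 0.8}

print(round(weighted_score(offline_reader, time_heavy), 2))        # 0.44
print(round(weighted_score(offline_reader, annotation_heavy), 2))  # 0.79
```

In this toy setting, the same reading behaviour scores 0.44 when reading time dominates and 0.79 when annotations dominate, which is one way to open a discussion about how much a seemingly neutral parameter decides.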

Dealing with Annotational Abundance

If until now we have focused on how the tool works for students in their learning process, for the final section, we want to discuss the tool from the viewpoint of lecturers. As mentioned previously: the biggest advantage is that it encourages students’ preparatory reading work, and it is quite effective in doing so. Being able to assume that students have read the texts allows for better in-class discussions and a more focused preparation of the class – especially since the students’ annotations offer a clearer idea of which parts of the texts they find especially difficult or insightful.

There are, however, also some drawbacks. Depending on the group size, going through the comments becomes a day’s task in itself: if 60 students each post 6 comments, that amounts to 360 comments per text. The interface includes some filter options and offers alternative ways of navigating through the text with all the annotations, but it is still clumsy and slow when moving between individual conversations among students and all annotations on a particular paragraph or sentence (see figure 2).

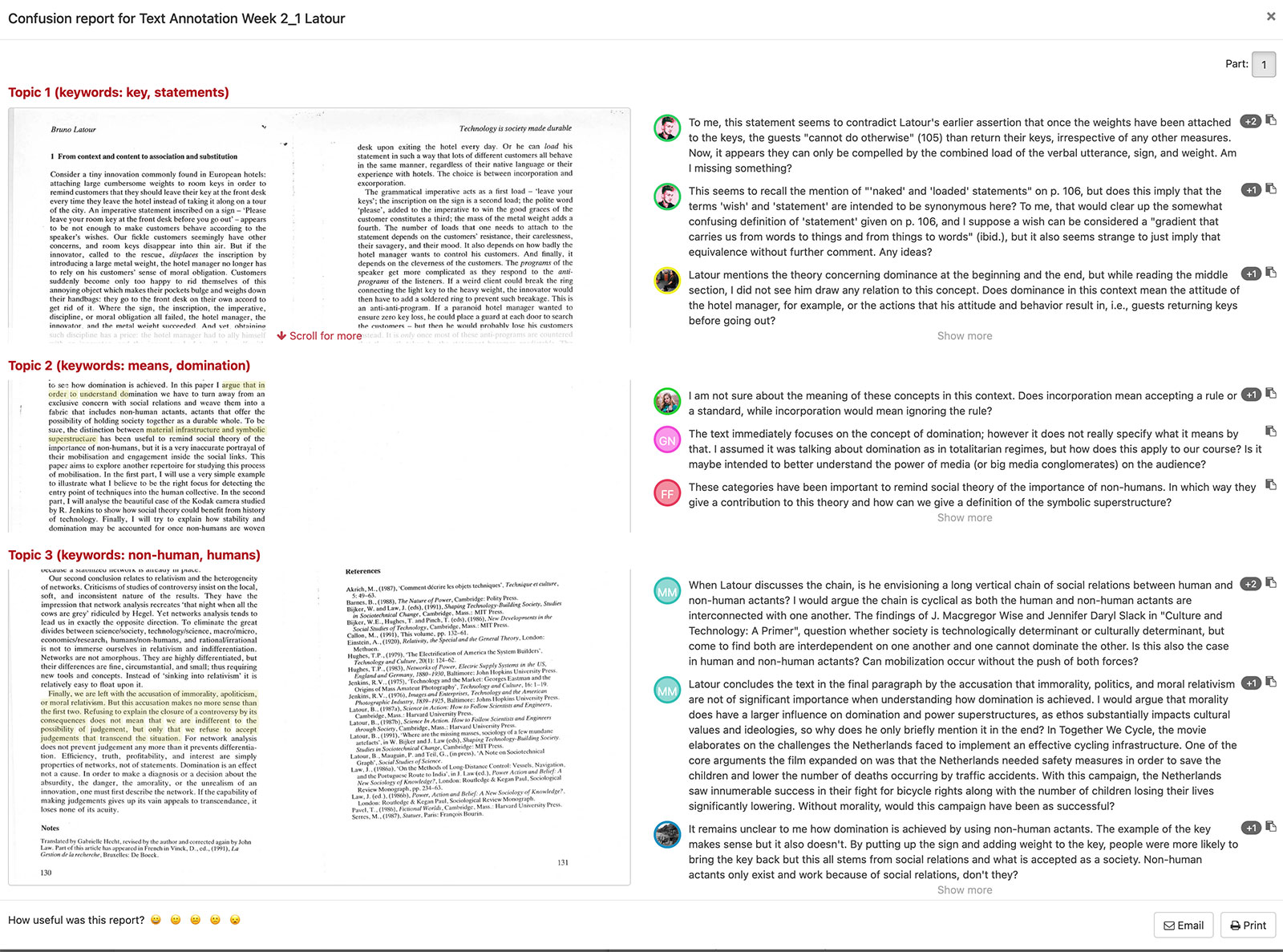

In line with the «solutionism» of many teaching tools,2 Perusall of course offers a technological fix for this annotational abundance through a «confusion report» (see figure 3), which is at heart an algorithmically curated list of the major topics of the students’ annotations augmented with a selection of individual comments. While the comments listed here are always worth a more in-depth discussion, and thus can be taken as starting points for in-class discussion, they in no way represent major topics of the text – or even of the students’ struggles with the text. We suspect that this might work better in the context of natural and/or technical sciences (as indicated by its founders' backgrounds). Like many teaching tools and the majority of didactic research, it was not developed and surely not optimized for the type of critical reading that is key for the humanities. The term « confusion report » is revealing in this context, implying the existence of one clear, correct answer.

Figure 3: Confusion report

Workload

Despite Perusall’s automated grading, the tool does not decrease the workload for lecturers. Not least, it creates expectations: a lot of students post relevant and important questions, or just basic questions of understanding. Since, in our context, these questions do not necessarily revolve around some basic insight but rather around historical or terminological details of the argumentation, it is difficult to answer all of them. Ideally, and this would be didactically helpful, the lecturer would – as is expected of students – visit the tool repeatedly in the days before the meeting to clarify some basic questions and help the debates steer clear of misinterpretations. We did this selectively, and it seemed to contribute to the students’ trust in the tool; but it is not realistic to do this week after week. An alternative strategy is to look only at the most upvoted comments and not read the remaining ones, but this irritated students because it regularly left good questions unaddressed. In future use, we plan at least to ask explicitly in class which questions posed on Perusall are still unanswered.

Heatmap

Finally, another ambivalent feature is the heatmap that shows when students were busy with the texts. Not surprisingly, even knowing the algorithm’s criteria does not prevent most students from doing their reading just before the deadline. On the other hand: why would we want to know? As with all datafied and visualized knowledge, it is surprisingly difficult to completely ignore its «evidence» and to resist the pressure (or at least the tool’s capability) to «fix» this.

We think that if used in one, but not all, classes of a BA or MA program, the tool can offer an interesting addition. At least for the majority of students, it seems to increase engagement with the text, and for lecturers, it offers starting points for the organization of in-class debate. As such it provides a welcome variation to the way we work with academic texts.

Preferred Citation

This open-access publication is licensed under the Creative Commons license CC BY-SA 4.0 DE.