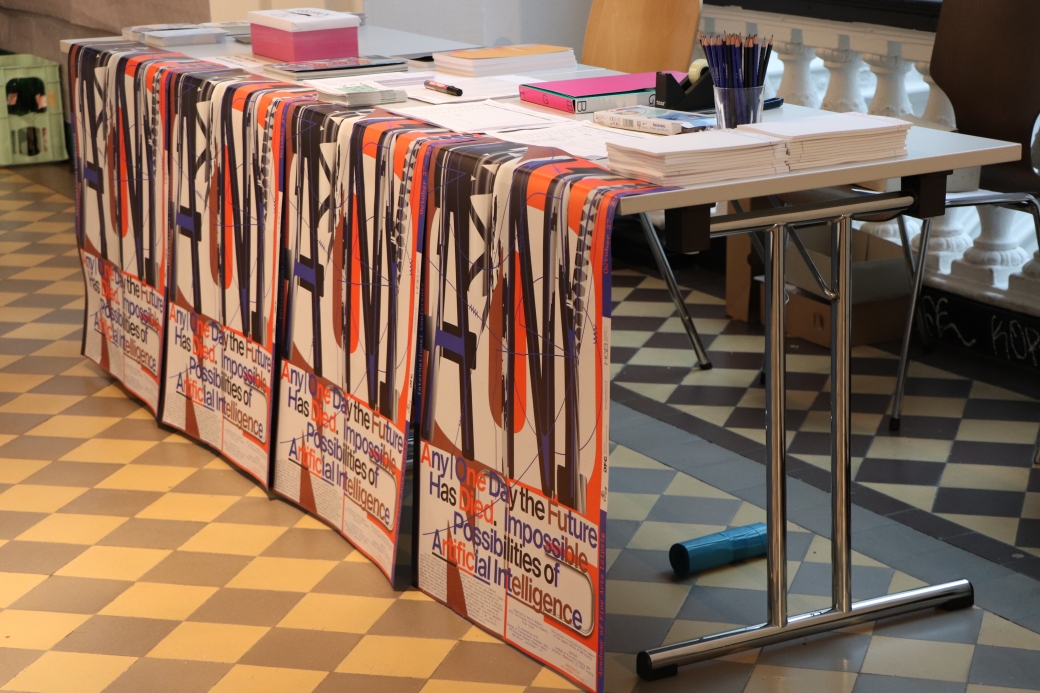

Any | One Day the Future Has Died. Impossible Possibilities of Artificial Intelligence

A Conference Report

The international conference Any | One Day the Future Has Died. Impossible Possibilities of Artificial Intelligence was held from October 27 to 29, 2022 at the Academy of Fine Arts Leipzig. The organizers, Katrin Köppert, Nelly Y. Pinkrah, Francesca Schmidt and Pinar Tuzcu, invited academics from different fields as well as artists, poets and other specialists to discuss Artificial Intelligence and its impact on the present and the future. The aim of the conference was to understand whether AI can be used effectively in today's digitized society, despite its controversial applications. By ‹effectively,› I mean working towards primarily social, anti-capitalist, and anti-consumerist goals, such as enabling people from different economic groups and backgrounds to cope with everyday challenges and to have equal access to information and knowledge. More broadly, the conference sought a more nuanced understanding of AI as a socio-cultural concept. As a participant, I had the opportunity to attend a variety of talks and workshops on a wide range of AI-related topics, as well as the art exhibition curated by Bob Jones.

Most talks were organized as panels with several speakers connected by a common sphere of interest, such as the possibilities of decolonizing AI, the apocalyptic (or not) future of AI, and the ecological impact of using AI. Speakers had time to present their ideas and then to discuss the themes with each other and with the audience. In this review, I will highlight some of the key themes and takeaways from the conference and give my overall impression of the event. Of the questions discussed, I would like to single out those I found most thought-provoking and comment on them from my own point of view.

Opening, Photo: Anna Sophie Knobloch

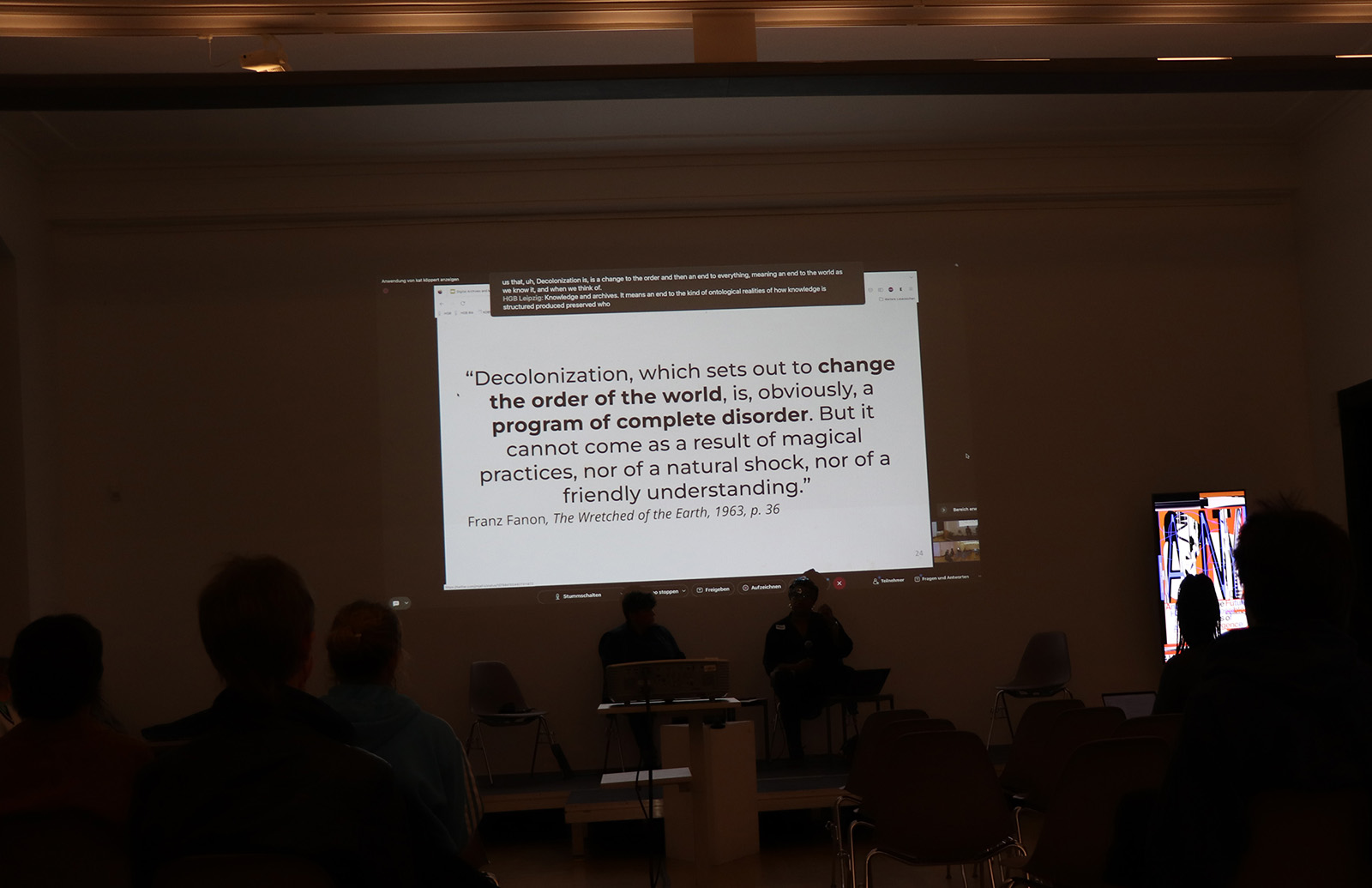

The broadest but also most essential question for me was this: «How can we create an ethical AI, and is it possible to make sure that AI decisions are fair and unbiased?» Most of the experts presented their understanding of AI development from a pessimistic point of view, which, considering the title of the conference, also followed from its guiding concept of the «death of the Future». Although AI has both positive and negative sides, I believe that the pessimistic approach has more potential to drive actual positive change, and I therefore really appreciated it, especially in the field of predicting and planning future events. Prediction and planning are central to the development and use of Artificial Intelligence systems. AI systems can be designed to analyze data and, based on past patterns and trends, make predictions about future events or outcomes, or about the future itself. They can be particularly efficient in situations where a large amount of data is available and where the relationships between variables are complex and not easily understood by humans. These predictions can then be used to inform planning and decision-making processes. However, as Kelly Foster of the organization Whose Knowledge, one of the speakers, pointed out, data politics are heavily biased against marginalized communities, whose information is not properly registered and stored. This inevitably leads AI systems to be biased against the same groups and to repeat the patterns and trends that enforced discriminatory policies in the first place. And while decolonization processes focus on semantic and aesthetic aspects, it is important to work on an ontological change of the data archives.

Kelly Foster and Francesca Schmidt, Photo: Ehab Assadi

So is it actually possible to make sure that AI decisions are fair and unbiased? Speakers at the conference referred to various ways in which AI capabilities are misused, leading us as a community to new codes of exclusion. In the panel DeColoniality of AI, Sara Morais dos Santos Bruss highlighted one of the most important aspects of AI development. She argued that images of AI are used to represent ‹pure› logic and data analysis, and emphasized that representing AI as a disembodied concept leads us to ignore both its biases and the fact that the lack of diversity among AI developers directly contributes to its drastic negative impact on the future.

Depicting AI as a purely logical and rational entity can prevent us from holding its developers accountable because it suggests that the AI is not capable of making mistakes or acting in a biased or unethical manner. This can create the impression that the developers are not responsible for the actions or outcomes of the AI, and that any negative consequences are simply the result of AI's own ‹logical› decision-making. However, it is important to recognize that AI is developed and programmed by humans, and as such, it can reflect the biases and limitations of its creators. By acknowledging this, we can hold the developers of AI accountable for its actions and outcomes.

Working towards the reduction of discrimination in AI means not only using diverse and representative data for training, addressing knowledge gaps and marginalized data archives, but also implementing fair algorithms, for instance through techniques such as fair classification and fair regression, which mitigate the impact of biases in the data. However, this is only possible if we acknowledge AI as an embodied entity and engage a diverse group of stakeholders in the development and deployment of AI systems, so that the perspectives and needs of a wide range of people are taken into account. It is important to note that most social groups today are underrepresented, or not represented at all, in the field of AI development. As a result, limiting factors such as race, ethnicity, age, gender and socio-economic status can migrate from this sphere into the spheres where AI is applied.

One speaker on the Decolonial Weavings, Vernacular Algorithms panel, Radhika Gajjala, shifted the focus to the basic understanding of AI. She led us to the next important question: «How does exposure to data influence the body of an AI and of a human?» She drew a metaphorical connection between working with data and the practice of weaving, which I find very accurate with regard to complex contextuality. Gajjala highlighted the importance of big data sets and of how the data is collected and, most importantly, interpreted. As an example, she talked about #hijab on Twitter and how this hashtag leads to completely different themes and perspectives, from historical events to modern discussions about the hijab as a form of oppression or a form of cultural identity. The vernacular practices of Twitter (that is, the customs, behaviors, and ways of communicating that are specific to a particular culture or community) include immediate, reaction-based interactions, indirect referencing, speaking into the void, and the use of euphemisms. These aspects make analyzing the data extremely complicated for AI systems. That is why using this kind of data on controversial or sensitive topics requires strong moderation and interpretation that takes the changing context into consideration. At the same time, the amount of data that needs to be interpreted and mapped for AI is enormous and impossible for humans to grasp. The act of weaving, as a symbol of community and connection, serves in this context as a metaphor for the way in which these various elements are brought together and interwoven to create complex meaning.

Radhika Gajjala, Photo: Ehab Assadi

Another important aspect Radhika Gajjala talked about was the pace of time and the significant changes in how humans experience temporality as a result of technology use. In some cases, the pace of everyday life may feel faster due to the increasing demands and pressures placed on people, such as the need to juggle work, family, and social commitments. The availability of technology, such as smartphones and the internet, also makes it easier for people to stay connected and creates the expectation that they respond to messages and requests at all times, contributing to a faster pace of life. Gajjala asked whether it is technology itself that is speeding us up, or rather the exposure to an enormous amount of information and our inability to react to and interpret all of it. In my opinion, the feeling of being overwhelmed or out of control can contribute to anxiety and depression. When the pace of life increases, people may feel that they are unable to keep up or that they do not have enough time to handle all of their responsibilities.

Tung-Hui Hu, Photo: Ehab Assadi, edited by Katrin Köppert

The topic of the accelerating pace of life and the body's reaction to it was taken up by Tung-Hui Hu during the panel AI – Between Apocalypse, Lethargy, and Wonder. He argued that as digital technologies have become increasingly prevalent in everyday life, they have contributed to a sense of «digital lethargy», a feeling of being overwhelmed and disconnected from the world. The constant availability of digital technology and the pressure to be connected through it can have a negative impact on mental health, contributing to feelings of anxiety and loneliness. Hu explored the ways in which digital technology has transformed our relationship to time, space, and our own bodies, and how these changes have had both positive impacts on society (increased connectivity, greater access to information, improved efficiency through automation, etc.) and negative ones. Among the negative aspects are disconnection from the physical world, leading to a sense of alienation; loss of privacy, as data becomes a new form of capital; disruption of social relationships through a constant need for attention; and increased polarization, since technology allows people to seek out and consume information that confirms their existing beliefs and biases. On the positive side, digital fatigue can be understood as a new form of protest against the exploitation of data and the generic attention economy as extreme capitalist practices.

In conclusion, I would like to say that I had a very positive impression of the conference. The keynote speakers were highly engaging and offered thought-provoking ideas and concerns about the future of AI and its impact on society. I would also like to highlight the high level of the discussions between the experts, which were sometimes even more engaging than the talks themselves.

One of the standout features of the conference, and one that I find extremely important, was the diversity of attendees. They came from a wide range of countries, backgrounds, and industries, which made for a rich and lively exchange of ideas and helped to avoid the single ‹corporate› perspective that is still very common at technology-related events.

The speakers managed to shift my understanding of AI technologies towards a more conscious and cautious approach. It is clear that the field of AI is rapidly evolving and that there is much more to be learned, explored and changed. I am grateful for the opportunity to have attended this conference and look forward to following future developments in the field.

This open-access publication is released under the Creative Commons license CC BY-SA 4.0 DE.