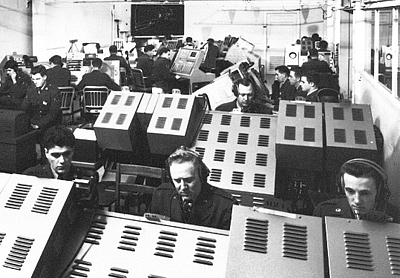

Prototyp eines Monitorraums zur Steuerung von Abfang-Operationen, SAGE-Leitungszentrum im Barta Building des Massachusetts Institute of Technology, 1953. Mit freundlicher Genehmigung der MITRE Corporation.

Of Humble Origins

The Practice Roots of Interactive and Collaborative Computing

A slightly shorter version of this text was translated and published in Zeitschrift für Medienwissenschaft, No. 12, 2015.

The shorter German translation deals with the roots of interactive computing; the longer version deals with both interactive and collaborative computing.

The digital stored-program computer was developed as a technology for automating the large-scale calculation work that since the time of the French Revolution had been performed cooperatively, in an advanced form of division of labor («human computers»). This led to the construction of digital electronic computing, which was little more than large-scale calculators. The development work involved various scientists, for whom the new devices were scientific equipment of essential importance for their own work (cryptology, weapons design), as well as various engineers in a supporting role. Technologically, this turned out to be a dead-end road, of which little survived, apart from batch-computing such as payroll calculation, tax calculation, compiling, and of course certain scientific calculations.

What today, typically, is conceived of a «computing», namely, «personal computing», initially developed as a technology for facilitating large-scale cooperative work activities (initially air defense, later air traffic control and airline reservations) in order to deal with the problem that had become too complex to be performed by conventional means of the coordination of cooperative work, manual or mechanical. The technology of interactive computing subsequently branched out in all directions, ranging from interactive human-computer systems such as workstations, laptop computers, and smartphones, to «embedded» computing devices for the purpose of controlling machinery such as machining stations, car engines, and washing machines, in which the computing device «interacts» with mechanical or other environmental entities.

Important paradigms of interactive computing applications were developed in ways that have remarkable similarities: they were built by practitioners as practical techniques for their own use or for the use of their colleagues, and later generalized.

1. The practice roots of computing technologies

Unlike nuclear energy or GPS navigation, computing technologies did not originate from a specific body of scientific theory.1 Nor did they, like radio communication, develop in step with the development of a body of scientific theory. To be sure, their development have depended critically upon a host of mathematical theories (Boolean algebra, Shannon’s information theory, recursive function theory, etc.), but they were not the result of the application of any specific body of theory. As pointed out by the eminent historian of computing Michael Mahoney,2 computer science has taken

«the form more of a family of loosely related research agendas than of a coherent general theory validated by empirical results. So far, no one mathematical model had proved adequate to the diversity of computing, and the different models were not related in any effective way. What mathematics one used depended on what questions one was asking, and for some questions no mathematics could account in theory for what computing was accomplishing in practice.»3

That is, «the computer» was not, strictly speaking, «invented». As Mahoney puts it, «whereas other technologies may be said to have a nature of their own and thus to exercise some agency in their design, the computer has no such nature. Or, rather, its nature is protean».4 According to Mahoney, there therefore was a time, a rather long time, when the question «What is a computer, or what should it be?», had «no clear-cut answer», and the computer and computing thus only acquired «their modern shape» in the course of an open-ended process that has lasted decades.5 And there is no reason why one should assume that the concept of «computing» has solidified and stabilized: the jury is still out, as the immense malleability of «the computer» is being explored in all thinkable directions. In other words, it is confused to conceive of «the computer» as one technology. It is a protean technology, and how it develops is determined by its practical applications in a far more radical sense than any other technology.

Computing technologies arose out of the coordinative issues involved in the organization of cooperative work in the modern industrial civilization. With the development of industrial society arose, for example, a massive need for calculation such as the production of astronomical tables for purposes of maritime navigation. Such tables were of the utmost importance, since any inaccuracies could lead to loss of ship, men, and cargo. Other forms of mass calculation, such as logarithmic tables, gained similar importance in all branches of industry, commerce, and warfare. Beginning in the wake of the French Revolution, engineers and managers such as Gaspard de Prony began to organize large-scale calculation work as a cooperative effort on the model of the advanced division of labor characteristic of the early industrial form of organization that had evolved in the «manufactories»6. As the industrial civilization, with its evermore intensive social division of labor, unfolded, calculations on an increasingly large scale became essential: accounting and payroll calculations in industrial enterprises, masse calculations in insurance and financial institutes, etc. eventually required armies of «human computers»7, clerical staff specialized in performing highly routinized calculation work organized on the model of industrial division of labor.

But by the middle of the 20th century, with the immense calculation work required by industrialized warfare, the development of new forms of weaponry such as nuclear weapons etc., the overhead cost of coordinating the cooperative mass-calculation work performed by «human computers» began to exceed the benefit of this form of organization. Even with desktop calculators and punched-card tabulators, coordination costs were becoming forbidding. The model was no longer viable. «By the 1940s, […] the scale of some of the calculations required by physicists and engineers had become so great that the work could not easily be done with desktop computing machine. The need to develop high-speed large-scale computing machinery was pressing.»8 This was the overriding motivation for the development of the first electronic digital computers. As Alan Turing put it in a report written towards the end of 1945:

«Instead of repeatedly using labour for taking material out of the machine and putting it back at the appropriate moment all this will have to be looked after by the machine itself. […] Once the human brake is removed the increase in speed is enormous.»9

Thus, the early electronic computers were little more than large and very fast calculators that, when configured, could perform in one run an entire calculation task that previously would have involved a large number of highly specialized human computers. When this technology was adopted for commercial use in the late 1950s, it was still used for batch processing, the only important difference being that business applications such as payroll calculation involved the processing of vast amounts of data. Again, just as the use of computers for the automation of mass calculation, in administrative settings stored-program computing was used as a substitution technology; that is, the new computers were designed for automating the work of the central computing departments of large-scale organizations, replacing entire batteries of punched-card tabulators by a single computer such as the IBM 1401. Thus, most of the computer systems installed in commerce and government during the 1950s and 1960s were «simply the electronic equivalents of the punched-card accounting machines they replaced»10. That is, in spite of claims to «universality», its actual design and use was, as Turing had put it, strictly limited to eliminating «the human brake».

2. The practice roots of interactive computing

Parallel to the development of the technologies of automatic calculation aimed at overcoming the «human brake», an entirely different computing technology was being developed that did not aim at automation but rather at a paradigm of computing in which the computing machine assisted workers in performing and coordinating their work. The prototype of this new computing paradigm became know as Whirlwind.11

The motivation for this development effort was quite different from the mundane problems of coordinating mass-calculation work. In August 1949, the Soviet Union exploded a nuclear bomb, and since it also possessed bomber aircraft capable of delivering such weapons at targets in the US, US authorities realized that the existing air defense system was quite inadequate for the situation that had arisen. The problem was basically that the coordination effort of the existing system was of severely limited capacity. As Judy O’Neill explains the conundrum:

«In the existing system, known simply as the «manual system», an operator watched a radar screen and estimated the altitude, speed, and direction of aircraft picked up by scanners. The operator checked the tracked plane against known flight paths and other information. If it could not by identified, the operator guided interceptors into attack position. When the plane moved out of the range of an operator’s radar screen, there were only a few moments in which to «hand-over» or transfer the direction of the air battle to the appropriate operator in another sector.»12

This cooperative work organization, the «manual system», did not scale up to meet the challenge posed by large numbers of aircraft with intercontinental reach and carrying nuclear weapons. A radical transformation of the organization of the air-defense organization and its technical infrastructure was seen as a matter of urgency. The solution that was eventually chosen was to construct a new air defense system. Eventually named Semi-Automatic Ground Environment, or SAGE, the system was divided into 23 centers distributed throughout the USA. Each of these centers would be responsible for monitoring the airspace of the sector and, if required, for directing and coordinating military response. The work of each center was to be supported by a high-speed electronic digital processing machine that would receive and process data from radar sites via a system of hundreds of leased telephone circuits, and, to fulfill its purpose, it would do so in «real time», that is, in step with the frequency of state changes in the environment. In other words, what was demanded was a computing technology that was entirely different from the large-scale calculation machines that were being developed at the time. The task of developing it was assigned in 1950 to a team at MIT’s Servomechanism Laboratory led by Jay W. Forrester that had already been developing a digital flight simulator and thus had solid experience with the issues of real-time computing.13

The stored-program paradigm was adopted as the foundation of development work in the Whirlwind project,14 but the Whirlwind computer made radically different use of the idea of the stored program. Whereas EDVAC and other stored-program computers were designed for automatic number-crunching in batch-mode, that is, without any interaction with the environment (operators included) until the job reached its prespecified end-state, Whirlwind was designed to process data in ongoing interaction with the environment (radar data, operator instructions, target tracking, etc.).

A major technical issue in developing Whirlwind was the speed and reliability of computer memory. Consequently, significant effort was devoted to developing the new technology of magnetic core memory, based on a web of tiny magnetic ceramic rings. Core memory technology, which did not become operational until the summer of 1953, made Whirlwind «by far the fastest computer in the world and also the most reliable» 15. It was a «monumental achievement»16. In addition, also in order to increase operational speed, the architecture of the underlying electric circuitry was designed to offer parallel processing or «synchronous parallel logic» «that is, the transmitting of electronic pulses, or digits, simultaneously within the computer rather than sequentially, while maintaining logical coherence and control. This feature accelerated enormously […] the speeds with which the computer could process its information.»17

Whirlwind was also «first and far ahead in its visual display facilities» which, among other things, facilitated the «plotting of computed results on airspace maps»18. Complementary to this feature was a «light gun» with which the operator could select objects and write on the display: «As a consequence of these two features, direct and simultaneous man-machine interaction became feasible»19.

Whirlwind and its offspring, the Cape Cod and SAGE computers, inaugurated and defined a new technological paradigm, typically referred to as computerized real-time transaction processing systems.20 This is a technology that facilitates workers in a cooperative effort in as much as the system provides them with a common field of work in the form of a data set or other type of digital representation (possibly coupled to facilities outside of the digital realm) and thus enables them to cooperate by changing the state of the data set in some strictly confined way:

«On-line transaction processing systems were programmed to allow input to be entered at terminals, the central processor to do the calculation, and the output displayed back at the terminal in a relatively short period of time. […] The services that could be requested were restricted to those already pre-programmed into the system.»21

The operators at the various centers and on the national scale were engaged in cooperative work in that their individual activities were coupled with those of their colleagues in an through the SAGE system and its network of radar stations, communication lines, tracking devices, handovers procedures. The SAGE computer was designed to assist workers in the highly routinized work of processing — in real time — the vast masses of transactions generated by this network. Given that, its support of cooperative work and associated coordinative practices was quite rudimentary.

But in view of the contingent nature of the domain (the inherently uncertainty of the state of affairs in the sky, the often dubious reliability of radar data and transmission lines, etc.) coupled with the extremely safety-critical nature of the work (namely, preventing nuclear attack), support of workers’ ability to improvise had been a design requirement from the very beginning of the Whirlwind project. The SAGE system was not designed to eliminate the «human brake» but to enable cooperating operators to perform a task that could no longer be coordinated manually. From the beginning, that is, the SAGE system was planned as «semi-automatic». Thus, the Cape Cod prototype version and the ultimate SAGE computer systems were deliberately designed for interactive computing, complete with real-time computing, graphical CRT displays, handheld selection devices (light guns, joysticks), direct manipulation.

While a crucial step in the development of interactive computing, Whirlwind and its family offered only very few degrees of freedom, to use an engineering term. But again, the limits of the Whirlwind exemplar of this paradigm were evident from the very beginning. It was, for example, obvious that there would be situations where air-defense operators would need to interact with colleagues, perhaps at another center, in ways different from «those already preprogrammed into the system». Thus, in the summer of 1953, a «man-to-man» telephone system was installed so as to enable operators at different locations to simply talk to one another.22 That is, the computational system did not support operators in enacting or developing coordinative practices, and contingencies had to be handled outside of the computational system, as ordinary conversations among operators within each center or as telephone conversations among operators across centers.

This solution was of course merely a hack — namely, a hack that indicated that when it comes to facilitating cooperative work the online transaction processing paradigm suffers from severe limitations. Even after its conception, computing power was excessively expensive. Thus, the interactive computing paradigm almost vanished from sight outside of the special domains of online mass-transaction processing such as air traffic control and airline reservation, in which the costs of this kind of computer equipment could be justified. Because of their cost, it was mandatory that computer systems were operating close to full capacity. Consequently, most of the few computers that were around in the 1960s were running in a batch-processing mode, one job after another, or, as it was aptly expressed by J. C. R. Licklider, the «conventional computer-center mode of operation» was «patterned after the neighborhood dry cleaner (‹in by ten, out by five›)»23

3. The apprenticeship of interactive computing

The paradigm of which Whirlwind was the exemplar did not die, however. Whirlwind did leave a legacy in the form of a «culture» of interactive computing, typically vaguely expressed in notions such as «man-computer symbiosis»24, «human intellect» «augmentation system»25, and «man-machine system»26, that survived in the particular community of practice that had developed the paradigm in the first place.

First of all, there was a direct personal continuity: «The people who worked on the two projects gained an appreciation for how people could interact with a computer. This appreciation helped the development and maturing of interactive computing, linked with time-sharing and networking»27. More than that, the Whirlwind project and the subsequent development of SAGE were research and development projects on a huge scale. The project therefore had tremendous impact on American computer science and engineering communities. In fact, half of the trained programming labor force of the US at the time (1959) was occupied with the development of the software for the SAGE system.28

Secondly, when the initial Whirlwind project was finished in 1953, the original Whirlwind prototype was not scrapped but remained available on the MIT campus. Furthermore, as part of the further development of SAGE transistorized experimental computers based on the Whirlwind architecture were developed. These computers were also handed over to MIT campus in 1958 and were used intensively by graduate students and researchers: «The attitude of the people using the Whirlwind computer and these test computers was important in the establishment of a ‹culture› of interactive computing in which computers were to be partners with people in creative thinking»29. For example, Fernando Corbató, who later played a central role in the development of time-sharing, recalls that «many of us [at MIT] had cut our teeth on Whirlwind. Whirlwind was a machine that was like a big personal computer, in some ways, although there was a certain amount of efficiency batching and things. We had displays on them. We had typewriters, and one kind of knew what it meant to interact with a computer, and one still remembered.»30

Thirdly, while the rather oppressive economic regime Licklider described so vividly («in by ten, out by five») could be tolerated in administrative operations such as payroll and invoice processing that had been organized on batch-processing principles ever since the introduction of punched-card tabulating earlier in the century, this regime effectively precluded all those applications that require high-frequency or real-time interactions between user and the digital representations. In particular, the «in by ten, out by five» regime made programming, especially debugging, a deadening affair. This gave ordinary computer technicians a strong motive for devising alternative modes of operation. As described by O’Neill, researchers at MIT, who had «cut their teeth» on Whirlwind and had experienced interactive computing first-hand, «were unwilling to accept the batch method of computer use for their work» and «sought ways to continue using computers interactively». However, «it would be too costly to provide each user with his or her own computer»31. So, around 1960 the idea of letting a central computer system service several users «simultaneously» was hatched. In the words of John McCarthy, one of the fathers of the idea, the solution was an «operating system» that would give «each user continuous access to the machine» and permit each user «to behave as though he were in sole control of a computer»32. The first running operating system of this kind seems to have been the Compatible Time-Sharing System or CTSS. It was built at MIT by a team headed by Fernando Corbató and was first demonstrated in 196133. The various users were connected to the «host» computer via terminals and each would have access to the computing power of the «host» as if he or she was the only user.

Fourthly, and in hindsight more important, conceptually at least, was the tenacious research effort of Douglas Engelbart to advance the interactive computing paradigm with a view to «augmenting» the «human intellect» by means of computing technology. 34 A conspicuous step in this work was reached in 1968 when Engelbart and his colleagues could demonstrate that many of the technologies that we now consider essential components of interactive computing (direct manipulation, bit-mapped displays, mouse, message handling, etc.) could be realized in an integrated fashion on the basis of an architecture that provided unrestrained access to multiple application programs.35

The protracted and enormously expensive research effort that went into building the Whirlwind and its offspring provided the basic principles and techniques of the interactive computing paradigm. But, confined as the paradigm was to computing laboratories and to the memories and aspirations of veterans of Whirlwind and SAGE, the continuity of the paradigm was a delicate one. It took a generation for the technologies of interactive computing to become a practical reality for workers outside of a narrow cohort of computer scientists and the corps of workers in certain time-critical work domains such as air defense and airline reservation. What impeded the further development of interactive computing, beyond on-line transaction processing? The short answer is that the basic principles of interactive computing could not be disseminated beyond the few areas where their application was economically sustainable. In the case of interactive computing, what impeded its further development after around 1960 was the fact that computing equipment was enormously expensive until the development of especially microprocessors in the 1970s made mass production of computers economically viable.36

In addition to the «staggering problems» encountered in the production of transistors, the application of the technology in computing was impeded by another bottleneck problem, an impasse called the «tyranny of numbers». Circuits consisted of discrete components (transistors, resistors, capacitors, etc.) that had to be handled individually. In the words of Jack Morton, a manager semiconductor research at Bell Labs, «Each element must be made, tested, packed, shipped, unpacked, retested, and interconnected one-at-a-time to produce the whole system». To engineers it was evident that this meant that the failure rate of circuitry would increase exponentially with increased complexity. Thus, so long as large systems had to be built by connecting «individual discrete components», this posed a «numbers barrier for future advances»37.

But by the end of the 1960s, as the integrated circuit technology matured and prices fell, small computer manufacturers such as Digital Equipment and Data General adopted the integrated circuit as the basis of compact «minicomputers» that could be sold for less than $100,000.

In a very similar way, the microprocessor was not developed for the computer industry at all, but was conceived as a means of reducing the cost and time required to design circuitry for appliances such as electronic calculators. In 1969, engineers at Intel suggested to offset the growing demand for circuitry engineers by making a programmable computer chip instead of one hard-wired calculator after another. The first «micro-programmable computer on a chip», the Intel 4004, was eventually released at the end of 1971, about 35 years after the discovery of the transistor and almost 30 years after the conception of interactive computing was first realized in Whirlwind. By the mid-seventies the microprocessor was an established and accepted technology complete with computer-aided design environments: «In no more than five years a whole industry had changed its emphasis from the manufacture of dedicated integrated circuits [for pocket calculators, etc.], to the manufacture of what in effect were very small computers»38.

Underlying this almost tectonic shift was a «favorable constellation» of factors in the semiconductor industry. Firstly, the invention of electronic calculators and their success had created a mass market for integrated circuits. Advances in solid-state physics in general and semiconductor manufacturing technologies in particular made it possible to produce integrated circuits with increasing densities and low power consumption. Secondly, related technical developments made it possible to produce high-density memory chips. Thirdly, minicomputer technology had matured to the stage that their hardware architectures could serve as a model for microprocessor design. That is, the microprocessor technology, initially developed for desktop calculators, was shifted laterally and formed a critical component technology in the personal computer that was then created.

4. Interactive computing becomes practical reality

When Engelbart undertook his experimental work in the second part of the 1960s, integrated circuits had been in their infancy. The computer platform that was available to his laboratory for its experiments consisted of a couple of minicomputers, but in 1967, the laboratory was able to acquire its first time-sharing computer; it cost more than $500,000.39 However as shown above, in the beginning of the 1970s, the availability of increasingly powerful integrated circuits offered a radical change in the constituent technologies of computing. Thus, in the beginning of the 1970s interactive computing was at last able to surge forward again. The first step in this was taken in 1972 when technicians at Xerox’ Palo Alto Research Center (PARC), working across the road from Engelbart’s lab at the Stanford Research Institute and inspired by his work, began building what they called a «personal computer», the Xerox Alto.40 Released in 1974, only seven years after Engelbart had spent $500,000 on a time-sharing-enabled computer, the Alto had a performance comparable to that of a minicomputer but the cost had dwindled to about $12,000. This was still well above what was commercially viable, but the Alto was anyway primarily developed as an experimental platform. Eventually 1,500 machines were built and used by researchers at Xerox and at a few universities.41

The vision of «personal computing» underlying the Alto design was clearly stated: «By ‹personal computer› we mean a non-shared system containing sufficient processing power, storage, and input-output capability to satisfy the computational needs of a single user»42. In accordance with this vision, the Alto in its design was primarily aimed at «office work»:

«The major applications envisioned for the Alto were interactive text editing for document and program preparation, support for the program development process, experimenting with real-time animation and music generation, and operation of a number of experimental office information systems. The hardware design was strongly affected by this view of the applications. The design is biased toward interaction with the user, and away from significant numerical processing.»43

After a lengthy process of experimentation and ongoing design, the Alto was followed by the Xerox 8010 Star which was released in 1981.44

«Alto served as a valuable prototype for Star. Over a thousand Altos were eventually built, and Alto users have had several thousand work-years of experience with them over a period of eight years, making Alto perhaps the largest prototyping effort in history. There were dozens of experimental programs written for the Alto by members of the Xerox Palo Alto Research Center. Without the creative ideas of the authors of those systems, Star in its present form would have been impossible.»45

It is worth making a note of this: «perhaps the largest prototyping effort in history», encompassing «several thousand work-years of experience» with more than a thousand Altos running «dozens of experimental programs». Interactive computing certainly did not simply drop out of the sky. And then, less than ten years after the release of the Alto, Apple could release the first Macintosh at a unit price of about $1,200, only one tenth of the cost of the Alto a decade earlier. An economically viable platform for interactive computing was now a practical reality.

The long gestation period of interactive computing technologies, from the Whirlwind generation to the Xerox generation of interactive computing paradigms, was not due to theoretical shortcomings. The technologies of interactive computing were never derived from any preexisting theoretical knowledge in the first place. No mathematical theory were in existence to provide the basis for, or reason about, interactive computing (and none has come into existence later). Nor was interactive computing an application of psychological theory. As pointed out by the eminent HCI research John Carroll.46, «some of the most seminal and momentous user interface design work of the last 25 years» (such as Sutherland’s Sketchpad and Engelbart’s NLS in the 1960s) «made no explicit use of psychology at all», and «the original direct manipulation interfaces» as represented by the Xerox Alto (1973) and the Xerox Star (1981) «owed little or nothing to scientific deduction, since in fact the impact went the other way round»47

More than that, the principles of «direct manipulation» were only articulated and systematized a decade after the release of the Alto (by Shneiderman48 and Hutchins, Hollan, and Norman49). HCI research developed as a post festum systematization effort devoted to understanding the principles of the interactive computing techniques that had emerged over three decades, from the Whirlwind to the Alto, the Star, and the Macintosh.

In fact, the second generation technologies of interactive computing were developed by computer technicians to satisfy requirements they themselves had formulated on the basis of principles and concepts known from their own daily work practices. On one hand, in the design of the Alto and the Star the technicians built on and generalized concepts that were deeply familiar to any Western adult working in an office environment. In their own words, «the Star world is organized in terms of objects that have properties and upon which actions are performed. A few examples of objects in Star are text characters, text paragraphs, graphic lines, graphic illustrations, mathematical summation signs, mathematical formulas, and icons»50. On the other hand, in interpreting these everyday concepts technically the designers applied the conceptual apparatus of objected-oriented programming (objects, classes of objects, properties, messages) that had been developed by Kristen Nygaard and Ole-Johan Dahl as a technique to obtain «quasi-parallel processing»51 and had later been further developed in the 1960s by Alan Kay and others in the form of Smalltalk:

«Every object has properties. Properties of text characters include type style, size, face, and posture (e.g., bold, italic). Properties of paragraphs include indentation, leading, and alignment. Properties of graphic lines include thickness and structure (e.g., solid, dashed, dotted). Properties of document icons include name, size, creator, and creation date. So the properties of an object depend on the type of the object.»52

Similarly, the technicians could build on «fundamental computer science concepts» concerning the manipulation of data structures in order to provide application-independent or «generic» commands that would give a user the ability to master multiple applications and to «move» data between applications:

«Star has a few commands that can be used throughout the system: MOVE, COPY, DELETE, SHOW PROPERTIES, COPY PROPERTIES, AGAIN, UNDO, and HELP. Each performs the same way regardless of the type of object selected. Thus we call them generic commands. […] These commands are more basic than the ones in other computer systems. They strip away extraneous application specific semantics to get at the underlying principles. Star’s generic commands are derived from fundamental computer science concepts because they also underlie operations in programming languages.»53

The important point here is that the design concepts reflected the technicians’ own practical experience, in that they could generalize the concepts of their own work (typographical primitives such as characters, paragraphs, type styles, etc.) and from similar concepts already generalized in computer science (MOVE, COPY, DELETE, etc.). Concepts like «character», «paragraph», «line», and «illustration», or «COPY and PASTE», etc. were a part of their own practices, as integral as pen and paper. For a long stretch, then, interactive computing technologies could be further developed and refined by technicians building tools for their own use, based on their daily practices and in a rather intuitive iterative design and evaluation process. The members of the Macintosh design team were similarly motivated: «we were our own ideal customers, designing something that we wanted for ourselves more than anything else»54. They knew the concept of interactive computing from Engelbart’s work (who had been building on the Whirlwind experience); they knew from their own lives what they would require of its design; and they had the advanced technical skills to realize their ideas.

5. The practice roots of interactive application programs

What is often forgotten (also in human-computer interaction research) is that «users» (apart from computer technicians, of course) do not interact with «computers», not even on the interactive computing paradigm. Practitioners rather use computational artifacts to interact with the objects and processes of their work, or digital representations thereof, and on the interactive computing paradigm they are able to do so in a fluent way, i.e., «in real time», in synch with the rhythm of their work activities. That is, for interactive computing to be of practical value and the computational artifact to become integral to the practice in question, the artifact must allow practitioners to express and manipulate their field of work in the native categories (object and functional primitives, etc.) of the work domain in question.

On the Whirlwind paradigm, computing was devised to make it possible for operators to work in terms of categories such as «target», «track», «flight level», etc. On the Xerox paradigm, on the other hand, computing was devised to make it possible work in terms of elemental graphical objects of literate sign systems (text, numerals, geometric objects) and the various aggregations in which these primitives may be combined. In contrast to Whirlwind, the Alto was conceived on the model of the «division of labor» introduced in time-sharing computing, that is, with a specialized program, an «operating system», enabling the user to execute a suite of specialized application programs in arbitrary combinations. Accordingly, the primitives were conceived of as resources, not for the user but for application programs. Although application programs were essential to assess the validity of the concept of «personal computing», what Xerox PARC was trying to build was not primarily a suite of application programs but a platform for users to be able execute a suite of application programs in an interactive manner.

The paradigmatic examples of interactive application programs such as word processors, spreadsheets, desktop-publishing programs, and computer-aided design have been subjected to remarkably little systematic investigation.55 The presumptions seems to be that these programs, conceptually at least, were merely applications of the principles of «direct manipulation» which was made accessible to practitioners by making interface elements visually «familiar» by means of design «metaphors».56 However, the application programs that made interactive computing practically useful for ordinary workers were — again — developed in interrelationship with the work practices in question and consequently incorporated their different logics.

The paradigmatic «killer-app», the VisiCalc spreadsheet, which was released for the Apple II computer in 1979, was developed by Dan Bricklin, a business school student with a background in software engineering, in collaboration with a friend of his, Bob Frankston, a computer programmer. The design story has become computing folklore — much of which is not supported by any evidence.57

As a software engineer with a background in developing word processing applications for minicomputers, Bricklin obviously found IT intolerably tedious having to use the paper-based worksheet when doing his assignments at Harvard. His brilliant idea was to take as his model the tabular format of the worksheet, its grid layout with prespecified arithmetic relationships between cells. As he explains in an «oral history» interview conducted in 2004:

«What I did with VisiCalc is – I sort of put it all together where I had the input, the calculations and the output all happening at the same time. And my vision was of that. It’s like word processing where basically you’re working on the actual output, so you’re expressing yourself into the output. […] I had to name them, so I was trying to figure out – how do you name cell values [for use in the calculation definition] in a way that’s going work. […]. So then finally I came up with: why not use a grid? […] So that was the basic idea of having the grid so as to be able to name it, much like a map. Of course I was used to using grids because that’s what we did in the numeric stuff we were doing […]. The columns and rows were just to make it easier to name them.»58

Taking the worksheet as a model, Bricklin and Frankston built VisiCalc on the paradigm of a key artifact in accounting practices that had been developed and used for more than a century and which, in turn, was a specialization of the tabular format that goes back to Sumerian accounting some 4,500 years ago.59 The remarkable accomplishment of the two young men in 1978 was to transform the received artifact, along with the associated formatting conventions, into an interactive computational artifact, as a result of which the electronic worksheet or spreadsheet could be immediately appropriated into the practices of accountants and similar numerate professionals.

In the electronic spreadsheet, the received artifact, the worksheet, served as more than a «metaphor». The visual «familiarity» was more than skin deep. The affordances of the tabular format of the worksheet is that it arithmetically is an arrangement of interrelated numbers that are ordered spatially (in rows and columns) according to norms of categorization inherent in the practice of accounting or budgeting or similar. By virtue of these affordances, the worksheet offers a synoptic view of the problem space, ordered according to the central concerns of the practice in question. The problem with the conventional worksheet, however, was that any change required tedious manual recalculation and updating. What the electronic version of the worksheet offered was to remove the numbing manual task of recalculation and updating, while retaining the affordances of the tabular format as developed over centuries.

The second of the paradigmatic «killer app», PageMaker, was released by Aldus in July 1985. PageMaker was developed by a professional team for an already mature application software market. Still, the design of PageMaker and, with that, desktop publishing (DTP), was devised by a seasoned newspaper man who for more than a decade had worked as production manager in the newspaper industry, that is, the work of page layout and putting together a publication from a production perspective. As he puts it himself, the «whole idea of page layout was near and dear to my heart, because I had done it the hard way with exacto [X-Acto] knives and razorblades and wax on the back of cold type [i.e., typesetting (as photocomposition) without the casting of metal]»60. After years of working as production manager, in which capacity Brainerd had also been involved in introducing electronic text processing in newspaper production, he joined the computer text-processing industry and, after another few years, founded Aldus in 1984 where he headed the development of PageMaker (in coordination with Apple and Adobe). The functional specifications for what became PageMaker was written by Brainerd in collaboration with the engineers.

The key artifact of page layout work practices, was the master (or «dummy») page, with its margin and column guides and gridlines, headers and footers, sections, and so on. It served to standardize page layout across pages and even publications and was therefore an indispensable instrument in the effort to make publications surveyable and appealing. Given the master page, most of the effort in layout work based on traditional phototypesetting consisted in pasting-up the text and illustrations into the prepared master layout «the hard way with exacto knives and razorblades and wax on the back of cold type», as Brainerd puts it, and the overwhelming concern in this work was to ensure, very meticulously, that all items were spaced and aligned. And again, any correction required another round of the tedious paste-up work. PageMaker retained the key artifact of layout work (and associated tools) in which the principles of ordering and operational procedures of layout work and the concomitant conceptual schemes were reflected. By enabling paste-up to be done on a digital representation of the page, it took much of the drudgery out of the making changes to the layout. Again, the key artifacts of the work practice were transformed, along with the associated principles of proper layout, into an interactive computational artifact.

Similarly, but at a different level altogether, computer-aided design (CAD) was to a large extent developed in the close interrelationship with the practical concerns of the aerospace and automotive industries from Ford to Lockheed, from Dassault to Renault.61 Even as late as 1980, with CAD systems based on dedicated minicomputers, a «seat» for one engineer would cost $125,000. It furthermore had to be installed in a special air-conditioned room and on top of that basic operator training took many weeks. Because of this, a work organization quite similar to the one that existed in mass-calculation work by «human computers» assisted by desktop calculators, with its «human barrier» and «in by ten, out by five» regime, was the norm:

«Because these systems were relatively expensive they tended to be run on what is typically referred to as a «closed shop» basis. The systems were typically operated by individuals who spent full time working at the graphics consoles. Engineers and designers would bring work to the «CAD Department» and then come back hours or days later to received plotted output which they would carefully check. Marked up drawings would be returned to the CAD operators who would revise the drawings and return them once again to the requestor. It was a rare situation where an engineer was either allowed to use a system for interactive creative design work or sit with an operator and have that person directly respond to suggestions. The costly nature of these systems often resulted two, or even three shift, operation.»62

Only with the establishment of the personal computer platform in the 1980s (first as dedicated «engineering workstations», later as CAD software on ordinary PCs), what happened in the development of spreadsheets and DTP application programs now also occurred in the development of CAD. In traditional engineering work (and in architectural work too), the use of «tracing paper» played a key role. The technique simply consisted in fastening a translucent sheet on top of the drawing and then, very carefully, retracing the drawing, line by line, with a pen or pencil.63 Although the process was exceedingly labor-intensive, even after blueprint copying became common, the use of tracing paper (and later polyester film) remained a key feature of engineering practices: as late as 1980, it was «the cutting edge for production of architectural drawings»64. The reasons for this lie in the logic of engineering and architectural design practices.

In the words of Charles Eastman,65 modern design practices in engineering and architecture «rely on multiple representations that each partially describe the elements making up a composition». This is a major source of the complexity of these practices:

«Designing manually or by computer consists of defining elements and composing them in the multiple dimensions of their interaction — geometric, structural, electrical, acoustic, etc.— using varied representations. These different representations are defined incrementally over time. New elements are added to existing descriptions, in order to depict the additional performances in which the designer is interested. [Design] involves creating information in one representation, then transferring it to others, until the composition satisfies diverse criteria that are evaluated in the different representations. […] Across all representations, the composition must be represented consistently. […]. A change in any one part of the design must be propagated to both higher and lower levels of detail.»66

The layer technique became a key feature of design work practices because it provided a means of handling this complexity. First of all, it enabled engineers and architects to make changes to a design, perhaps tentative ones, without having to redraw the entire drawing. Second, it allowed the individual designer to separate different aspects of the design of complex constructions, both conceptually and practically. Third, it provided a ordered framework enabling multiple designers to work in a distributed and yet ordered manner, each working on his or her elements as represented in separate layers, while all at the same time remain able to relate their partial work to the work of the others. And fourth, by making it straightforward to produce a large variety of copies by making different combinations of the layers, this layered separation also provided a relatively simple technique for coordination with the different professions involved in the design and construction or fabrication process.

As in the case of spreadsheets or desktop publishing, CAD did not alter the logic of the existing practice. It rather made it possible to engage in design of increasing complexity. First, of course, with CAD, the cost of making changes to drawings was reduced. And with CAD, the layer technique became far more manageable.67 As a result, CAD plans may now easily consist of more than 100 layers,68 which in turn has required standards for managing layer structures with respect to, for example, naming, drawing notations, etc.69

It is finally noteworthy that the layer technique was shifted laterally from architecture and engineering to other domains of drafting work (cf., e.g., Adobe Illustrator and Photoshop) as well as desktop publishing (cf., e.g., Adobe InDesign and Acrobat) and thus adopted by practitioners in professions that previously may not have used these techniques. In sum, important paradigms of interactive computing applications were developed in ways that have remarkable similarities, built by practitioners, as practical techniques, for their own use or for the use of their colleagues, and later generalized.

6. The practice roots of collaborative computing

The notion of computer-mediated communications began with the notion of «time-sharing» operating systems that matured around 1960. The users of these computers were typically engaged in cooperative work. Some were engaged in developing operating systems or other large-scale software projects and were, as a vital aspect of this, engaged in various forms of discourse with colleagues within the same project team and research institution, that is, with colleagues already connected to the same central computer system. Likewise, software technicians would need to coordinate with system operators about possibly lost files to be retrieved, about eagerly-awaited print jobs in the queue, etc. The time-sharing operating system they were building or using provided a potential solution to this need, and the idea of using the system to transfer text messages from one worker to another did not require excessive technical imagination. As one of the designers of one of the first email systems recalls:

«[CTSS] allowed multiple users to log into the [IBM] 7094 from remote dial-in terminals[] and to store files online on disk. This new ability encouraged users to share information in new ways. When CTSS users wanted to pass messages to each other, they sometimes created files with names like TO TOM and put them in «common file» directories, e.g. M1416 CMFL03. The recipient could log into CTSS later, from any terminal, and look for the file, and print it out if it was there.»70

A proper mail program, «a general facility that let any user send text messages to any other, with any content» was written for CTSS by Tom Van Vleck and Noel Morris in the summer of 1965 71. It allowed one programmer to post a message to other individual programmers, provided one knew the project they worked on, or to everybody on the same project. The message was not strictly speaking «sent»; it was appended to a file called MAILBOX in the recipient’s home directory. The same year Van Vleck and Morris also devised a primitive form of «instant messaging»72.

The scope of the exchange of messages with these and similar programs was limited by the boundary of the hierarchy comprising the local host computer system and the terminals connected to it. Messages could not travel beyond the event horizon of this black hole. This world of isolated systems dissolved with the development of network computing.

The official motivation driving the development of network computing in the shape of, in particular ARPANET, was (again) not to develop facilities for human interaction, not to mention cooperative work, but to utilize scarce resources in a more economical way. For Licklider, who also initially headed the development of ARPANET, the motivation for the network was to reduce «the cost of the gigantic memories and the sophisticated programs». When connected to a network, the cost of such shared resources could be «divided by the number of users»73. This vision was spelled out by Lawrence Roberts, who took over as manager of the development of ARPANET in 1966. Noting that the motivation for past attempts at computer networks had been «either load sharing or interpersonal message handling», he pointed to «three other more important reasons for computer networks […], at least with respect to scientific computer applications», namely: Data sharing, Program sharing, and

«Remote Service: Just a query need be sent if both the program and the data exist at a remote location. This will probably be the most common mode of operation until communication costs come down [since this] category includes most of the advantages of program and data sharing but requires less information to be transmitted between the computers.»74

On the other hand, electronic mail was explicitly ruled out as of no significance:

«Message Service: In addition to computational network activities, a network can be used to handle interpersonal message transmissions. This type of service can also be used for educational services and conference activities. However, it is not an important motivation for a network of scientific computers.»75

These motivations for developing the ARPANET were upheld and confirmed as the net began to be implemented:

«The goal of the computer network is for each computer to make every local resource available to any computer in the net in such a way that any program available to local users can be used remotely without degradation. That is, any program should be able to call on the resources of other computers much as it would call a subroutine.»76

Thus, as summarized by Ian Hardy, in his very informative history of the origins of network email, the primary motive was economic.

«ARPANET planners never considered email a viable network application. [They] focused on building a network for sharing the kinds of technical resources they believed computer researchers on interactive systems would find most useful for their work: programming libraries, research data, remote procedure calls, and unique software packages available only on specific systems.»77

After pioneering work on the underlying packet-switching architecture and protocols, the experimental ARPANET was launched in 1969, connecting measly four nodes.78 In the summer of 1971, when the network had expanded to fifteen nodes, a programmer named Ray Tomlinson at BBN (Bolt, Beranek, and Newman), devised a program for sending email over the network. He recalls that, while he was making improvements to a single-host email program (SNDMSG) for a new time-sharing operating system (TENEX) for the PDP-10 minicomputer, «the idea occurred to [him]» to combine SNDMSG with en experimental file-transfer protocol (CPYNET) to enable it to send a message across the network, from one host to another, and append it to the recipient’s MAILBOX file. For that purpose he also devised the address scheme NAME@HOST that has become standard.79 «The first message was sent between two machines that were literally side by side. The only physical connection they had (aside from the floor they sat on) was through the ARPANET», that is, through the local Interface Message Processor (IMP) that handled packet switching. To test the program, he sent a number of test messages to himself from one machine to the other. When he was satisfied that the program seemed to work, he sent a message to the rest of his group explaining how to send messages over the network: «The first use of network email announced its own existence»80. The program was subsequently made available to other sites on the net and was soon adapted to the IBM 360 and other host computers81.

An instant success within the tiny world of ARPANET programmers, this very first network email program triggered a chain reaction of innovation that within less than a couple of years resulted in the email designs we use today: a list of available messages indexed by subject and date, a uniform interface to the handling of sent and received mail, forwarding, reply, etc. — all as a result of programmers’ improving on a tool they used themselves. In 1977, an official ARPANET standard for electronic mail was adopted.82 The history of network email after that is well known. The technology migrated beyond the small community of technicians engaged in building computer networks to computer research in general and from there to the world of science and eventually to the world at large.

What is particularly remarkable in this story, and what also surprised those involved when they began to reflect on the experience, was «the unplanned, unanticipated and unsupported nature of its birth and early growth. It just happened, and its early history has seemed more like the discovery of a natural phenomenon than the deliberate development of new technology»83. In the same vein, the official ARPANET Completion Report notes that «The largest single surprise of the ARPANET program has been the incredible popularity and success of network mail» 84.

Network email was not only «unplanned» and «unanticipated»; it was «mostly unsupported»85, for, as noted already, the objective of the ARPANET was resource sharing. However, as Janet Abbate points out in her history of the emergence of the Internet, the aimed-for resource sharing failed to materialize. By 1971, when the original fifteen sites for which the net had been built were all connected, «most of the sites were only minimally involved in resource sharing» 86. She notes that the «hope that the ARPANET could substitute for local computer resources was in most cases not fulfilled», and adds that, as the 1970s progressed, «the demand for remote resources actually fell», simply because minicomputers «were much less expensive than paying for time on a large computer»87. The budding network technology represented by ARPANET was on the verge of being superseded and outcompeted by the proliferation of relatively inexpensive minicomputers. Thus, «Had the ARPANET’s only value been as a tool for resource sharing, the network might be remembered today as a minor failure rather than a spectacular success»88. But as it happened, email amounted to 75 percent of the traffic on the net as early as 1973.89 That is, as in the case of local email on time-sharing operating systems, network email came as an afterthought, devised by computer technicians for their own use, as a means for coordinating their cooperative effort of building, operating, and maintaining a large-scale construction, in this case the incipient Internet. Email was thrown together like the scaffolding for a new building, only to become a main feature, relegating the resulting building itself, which had been the original and official objective, to something close to a support structure.

This pattern — technicians building tools for use in their own laboratories — was to be repeated again and again in the development of collaborative computing, as evidenced by, for example, CSNET developed by Larry Landweber and others in 1979-81 to provide under-privileged computer scientists access to ARPANET as well as mail, directory services, news, announcements, and discussion on matters concerning computer science; USENET which was developed in 1979 by Jim Ellis and Tom Truscott as a news exchange network for Unix users without access to ARPANET;90 and the ARCHIE and GOPHER network file search protocols designed by Alan Emtage in 1989 and Mark P. McCahill in 1991, respectively.91

The case in point is of course the development of the World Wide Web (based on HTTP and HTML). It was initially developed by scientists at CERN for their own use, and the initial motive was almost identical with that of the Internet: access to resources across platforms. The technologies were themselves derived from previous technologies such as hypertext and markup languages. However, as with network email, when it arrived, the Web was soon adopted by others to be used in other contexts and for other purposes.92

Acknowledgments: The research reported in this article draws on research reported previously93 and has been supported by the Velux Foundation under the «Computational Artifacts» project.

- 1The mythology that computing technologies originated from a specific body of mathematical theory is common in accounts of the history of computer science. E.g., Martin Davis, The Universal Computer: The Road from Leibniz to Turing, New York 2000; George Dyson, Turing’s Cathedral: The Origins of the Digital Universe, London 2012.

- 2A fine collection of Mahoney’s papers, edited by Thomas Haigh, is now available: Michael S. Mahoney, Histories of Computing, Cambridge, London 2011.

- 3Michael S. Mahoney, Computers and mathematics. The search for a discipline of computer science, in: Javier Echeverría, Andoni Ibarra, Thomas Mormann (Ed.), The Space of Mathematics: Philosophical, Epistemological, and Historical Explorations, Berlin, New York 1992, 361.

- 4Michael S. Mahoney, The histories of computing(s), in: Interdisciplinary Science Reviews, Issue 2, 2005, 122.

- 5Mahoney, Computers and mathematics, 349.

- 6Cf. Gaspard C. F. M. Riche de Prony, Notice sur les Grandes Tables Logarithmique &c., in: Recueil des Discourses lus dans la seance publique de L’Academie Royale Des Sciences, Issue 7, 1824 (Translation entitled ‹On the great logarithmic and trigonometric tables, adapted to the new decimal metric system›, Science Museum Library, Babbage Archives, Document code: BAB U2l/l), excerpt probably copied at Charles Babbage’s request: https://sites.google.com/site/babbagedifferenceengine/barondepronysdescriptionoftheconstructi, last viewed 30 March 2015; Ivor Grattan-Guinness, Work for the hairdressers: The production of de Prony’s logartihmic and trigonometric tables, in: IEEE Annals of the History of Computing, Vol. 12, Issue 3, 1990, 177–185; Ivor Grattan-Guinness, The computation factory: de Prony’s project for making tables in the 1790s, in: Martin Campbell-Kelly, Mary Croarken, Raymond Flood, Eleanor Robson (Ed.), The History of Mathematical Tables: From Sumer to Spreadsheets, Oxford 2003 (Reprinted 2007), 105–122.

- 7David Alan Grier, When Computers Were Human, Princeton, Oxford 2005.

- 8Brian Jack Copeland, Colossus and the rise of the modern computer, in: Brian Jack Copeland (Ed.), Colossus: The Secrets of Bletchley Park’s Codebreaking Computers, Oxford 2006, 101–115.

- 9Alan M. Turing, Proposed electronic calculator, in: Brian Jack Copeland (Ed.), Alan Turing’s Automatic Computing Engine: The Master Codebreaker’s Struggle to Build the Modern Computer, Oxford 2005 (Written late 1945; submitted to the Executive Committee of the NPL February 1946 as ’Report by Dr. A. M. Turing on Proposals for the Development of an Automatic Computing Engine (ACE)’), 371.

- 10Martin Campbell-Kelly, William Aspray, Computer: A History of the Information Machine, New York 1996, 157.

- 11Cf. Judy Elizabeth O’Neill, The Evolution of Interactive Computing through Time-sharing and Networking, University of Minnesota 1992 (Ph.D. dissertation); Campbell-Kelly, Aspray, Computer: A History of the Information Machine; Kent C. Redmond, Thomas M. Smith, Project Whirlwind: The History of a Pioneer Computer, Bedford, Mass. 1980; Kent C. Redmond, Thomas M. Smith, From Whirlwind to MITRE: The R&D Story of the SAGE Airdefense Computer, Cambridge, Mass., London 2000. For a more vivid but also rather journalistic account, cf. Tom Green, Bright Boys, Natick, Mass. 2010.

- 12O’Neill, The Evolution of Interactive Computing, 15.

- 13Cf. Perry Orson Crawford, Application of digital computation involving continuous input and output variables (5 August 1946), in: Martin Campbell-Kelly, Michael R. Williams (Ed.), The Moore School Lectures: Theory and Techniques for Design of Electronic Digital Computers, Cambridge, Mass. 1985 (Revised ed. of Theory and Techniques for Design of Electronic Computers, 1947-1948), 374–392.

- 14The Whirlwind team had access to the then ongoing EDVAC design work. Herman H. Goldstine, The Computer from Pascal to von Neumann, Princeton, New Jersey 1972; Green, Bright Boys.

- 15Campbell-Kelly, Aspray, Computer: A History of the Information Machine, 167.

- 16O’Neill, The Evolution of Interactive Computing, 13.

- 17Redmond, Smith, Project Whirlwind, 217.

- 18Redmond, Smith, Project Whirlwind, 216.

- 19Ibid.

- 20Cf. O’Neill, The Evolution of Interactive Computing, 22.

- 21O’Neill, The Evolution of Interactive Computing, 22–23.

- 22Redmond, Smith, From Whirlwind to MITRE, 310f.

- 23Joseph Carl Robnett Licklider, Welden E. Clark, On-line man-computer communication, in: G. A. Barnard (Ed.), SJCC’62: Proceedings of the Spring Joint Computer Conference, 1-3 May 1962, Vol. 21, San Francisco, Cal. 1962, 113–128.

- 24Joseph Carl Robnett Licklider, Man-computer symbiosis (IRE Transactions on Human Factors in Electronics, March 1960), in: R. W. Taylor (Ed.), In Memoriam: J. C. R. Licklider, 1915-1990, Palo Alto, Cal. 1990, 4–11. (Also in Adele Goldberg, A History of Personal Workstations, Reading, Mass. 1988, 131–140).

- 25Douglas C. Engelbart, Augmenting human intellect: A conceptual framework (Prepared for: Director of Information Sciences, Air Force Office of Scientific Research, Washington 25, D.C., Contract AF49(638)-1024), Menlo Park, Cal. 1962.

- 26Ivan Edward Sutherland, Sketchpad: A man-machine graphical communication system, in E. C. Johnson (Ed.), SJCC’63: Proceedings of the Spring Joint Computer Conference, 21-23 May 1963, Vol. 23, Santa Monica, Cal. 1963, 329–346.

- 27O’Neill, The Evolution of Interactive Computing, 11.

- 28Cf. Martin Campbell-Kelly, From Airline Reservations to Sonic the Hedgehog: A History of Software Industry, Cambridge, Mass., London 2003, 39; Claude Baum, The System Builders: The Story of SDC, Santa Monica, Cal. 1981.

- 29O’Neill, The Evolution of Interactive Computing, 24.

- 30Fernando J. Corbató, Oral history interview, interviewed by A. L. Norberg, 18 April 1989, 14 November 1990, at Cambridge, Mass., University of Minnesota Digital Conservancy (Charles Babbage Institute), http://purl.umn.edu/107230, last viewed 30 March 2015, 14.

- 31O’Neill, The Evolution of Interactive Computing, 44.

- 32John McCarthy, Reminiscences on the history of time sharing (1983), Stanford University, http://www-formal.stanford.edu/jmc/history/timesharing/timesharing.html, last viewed 30 March 2015.

- 33Cf. Corbató, Oral history interview.

- 34Engelbart, Augmenting human intellect; cf. also Thierry Bardini, Bootstrapping: Douglas Engelbart, Coevolution, and the Origins of Personal Computing, Stanford, Cal. 2000.

- 35Cf. Douglas C. Engelbart, William K. English (1968), A research center for augmenting human intellect, in: FJCC’68: Proceedings of the Fall Joint Computing Conference, 9–11 December 1968, New York 1968, 395–410. Also in I. Greif (Ed.), Computer-Supported Cooperative Work: A Book of Readings, San Mateo, Cal. 1988, 81–105.

- 36A history of semiconductor technology can be found in the studies by Ernest Braun and Stuart Macdonald, Revolution in Miniature: The History and Impact of Semiconductor Electronics, Cambridge 1978; and by John Orton, Semiconductors and the Information Revolution: Magic Crystals that Made IT Happen, Amsterdam 2009. The transistor and integrated circuit technologies is recounted by the historians Michael Riordan and Lillian Hoddeson, Crystal Fire: The Invention of the Transistor and the Birth of the Information Age, New York, London 1997. – On the other hand, Bo Lojek offers a detailed and candid story of this development: Bo Lojek, History of Semiconductor Engineering, Berlin, Heidelberg 2007.

- 37Quoted in Tom R. Reid, The Chip: How Two Americans Invented the Microchip and Launched a Revolution, New York 2001 (2nd ed.; 1st ed., 1985), 16.

- 38Braun, Macdonald, Revolution in Miniature, 110.

- 39Cf. Bardini, Bootstrapping, 123, 251.

- 40Cf. Butler W. Lampson, Why Alto. XEROX Inter-Office Memorandum, Palo Alto, Cal. 1972, http://research.microsoft.com/en-us/um/people/blampson/38a-whyalto/Acrobat.pdf, last viewed 30 March 2015; Diana Merry, Ed McCreight, Bob Sproull, ALTO: A Personal Computer System Hardware Manual, Palo Alto, Cal. 1976 (Version 23; 1st Version, 1975); Charles P. Thacker, et al. (1979), Alto: A personal computer, CSL-79-11, Palo Alto, Cal. 1979 (Reprinted February 1984).

- 41Charles P. Thacker, Personal distributed computing: The Alto and Ethernet hardware, in: A. Goldberg (Ed.), A History of Personal Workstations, Reading, Mass. 1988, 267–289.

- 42Merry, McCreight, Sproull, ALTO: A Personal Computer System Hardware Manual, § 1.0.

- 43Thacker, et al., Alto: A personal computer, 3.

- 44Cf. David Canfield Smith, et al., Designing the Star User Interface, in: Byte, Vol. 7, 1982, 242–282; David Canfield Smith, Charles Irby, Ralph Kimball, Eric Harslem, The Star user interface: An overview, in: R. K. Brown, H. L. Morgan (Ed.), AFIPS’82: Proceedings of the National Computer Conference, 7–10 June 1982, Houston, Texas, Arlington, Virginia 1982, 515–528; Jeff Johnson, et al., The Xerox Star: A Retrospective, in: IEEE Computer, Vol. 22, Nr. 9, 1989, 11–26, 28–29.

- 45Smith, Irby, Kimball, Harslem, The Star user interface, 527.

- 46John M. Carroll, Introduction: The Kittle House Manifesto, in: John M. Carroll (Ed.), Designing Interaction: Psychology at the Human-Computer Interface, Cambridge 1991, 1.

- 47John M. Carroll, Wendy A. Kellogg, Mary Beth Rosson, The task-artifact cycle, in: John M. Carroll (Ed.), Designing Interaction: Psychology at the Human-Computer Interface, Cambridge 1991, 79.

- 48Ben Shneiderman, Direct manipulation: A step beyond programming languages, in: IEEE Computer, Vol. 16, Nr. 8, 1983, 57–69.

- 49Edwin L. Hutchins, James D. Hollan, Donald A. Norman, Direct manipulation interfaces, in: D. A. Norman, S. W. Draper (Ed.), User Centered System Design, New Jersey 1986, 87–124.

- 50Smith, Irby, Kimball, Harslem, The Star user interface, 523.

- 51Cf. Ole-Johan Dahl, Kristen Nygaard, SIMULA: An ALGOL-based simulation language, in: Communications of the ACM, Vol. 9, Nr. 9, 1966, 671–678; Kristen Nygaard, Ole-Johan Dahl, The development of the SIMULA languages, in: ACM SIGPLAN Notices, Vol. 13, Nr. 8, 1978, 245–272.

- 52Smith, Irby, Kimball, Harslem, The Star user interface, 523.

- 53Smith, Irby, Kimball, Harslem, The Star user interface, 525.

- 54Andy Hertzfeld, Revolution in the Valley, Sebastopol, Cal. 2005, xvii f.

- 55A obvious exception … Bonnie Nardi and her colleagues around 1990. Bonnie A. Nardi, James R. Miller, An ethnographic study of distributed problem solving in spreadsheet development, in: T. Bikson, F. Halasz (Ed.), CSCW’90: Proceedings of the Conference on Computer-Supported Cooperative Work, 7–10 October 1990, Los Angeles, Cal., New York 1990, 197–208; Bonnie A. Nardi, James R. Miller, The spreadsheet interface: A basis for end user programming, in: INTERACT ’90: Proceedings of the IFIP TC13 Third Interational Conference on Human-Computer Interaction, Amsterdam, North-Holland 1990, 977–983; Bonnie A. Nardi, James R. Miller, Twinkling lights and nested loops: distributed problem solving and spreadsheet development, in: International Journal of Man-Machine Studies, Vol. 34, 1991, 161–184; Michelle Gantt, Bonnie A. Nardi, Gardeners and gurus: patterns of cooperation among CAD users, in: P. Bauersfeld, et al. (Ed.), CHI’92 Conference Proceedings: ACM Conference on Human Factors in Computing Systems, 3-7 May 1992, Monterey, Cal., New York 1992, 107–117. See also Chengzhi Peng, Survey of Collaborative Drawing Tools. Design Perspectives and Prototypes, in: Computer Supported Cooperative Work (CSCW): An International Journal, Vol. 1, Nr. 3, 1993, 197–228; Yvonne Rogers, Coordinating computer-mediated work, in: Computer Supported Cooperative Work (CSCW): An International Journal, Vol. 1, Nr. 4, 1993, 295–315.

- 56For example, Mary Beth Rosson, John M. Carroll, Usability Engineering: Scenario-Based Development of Human-Computer Interaction, San Francisco 2001, 128.

- 57But for reliable accounts, cf. Campbell-Kelly, From Airline Reservations to Sonic the Hedgehog; Paul Freiberger, Michael Swaine, Fire in the Valley: The Making of the Personal Computer, New York 2000 (2nd ed.; 1st ed. 1984), 289–291.

- 58Daniel Singer Bricklin, Robert M. Frankston, Oral history interview, interviewed by M. Campbell-Kelly and P. E. Ceruzzi, 7 May 2004, University of Minnesota (Charles Babbage Institute) 2004 (OH 402), 12f.

- 59Cf. Hans J. Nissen, Peter Damerow, Robert K. Englund, Archaic Bookkeeping: Early Writing and Techniques of Economic Administration in the Ancient Near East, Chicago, London 1993 (Transl. from Frühe Schrift und Techniken der Wirtschaftsverwaltung im alten Vorderen Orient: Informationsspeicherung und -verarbeitung vor 5000 Jahren, Verlag Franz Beck 1990, Transl. by P. Larsen); Eleanor Robson, Tables and tabular formatting in Sumer, Babylonia, and Assyria, 2500 BCE – 50 CE, in: M. Campbell-Kelly, et al. (Ed.), The History of Mathematical Tables: From Sumer to Spreadsheets, Oxford 2003 (Reprinted 2007), 19–48; Jack Goody, The Domestication of the Savage Mind, Cambridge 1977.

- 60Paul Brainerd, Oral History of Paul Brainerd, Interviewed by S. Crocker, 16 May 2006, Interview transcript, Mountain View, Cal. 2006 (X2941.2005), 4f.

- 61Cf. Robert W. Weisberg, Problem solving and creativity, in: R. J. Sternberg (Ed.), The Nature of Creativity: Contemporary Psychological Perspectives, Cambridge 1988, 148–176.

- 62David E. Weisberg, The Engineering Design Revolution: The People, Companies and Computer Systems that Changed Forever the Practice of Engineering, Englewood, Col. 2008, http://www.cadhistory.net, last viewed 30 March 2015, 2:16.

- 63Cf. Chester W. Edwards, Overlay Drafting Systems, New York 1984; Frank Woods, John Powell, Overlay Drafting: A Primer for the Building Design Team, London 1987.

- 64Mark J. Clayton, Computational design and AutoCAD: Reading software as oral history, in: SIGraDi 2005: IX Congreso Iberoamericano de Gráfica Digital, 21–23 November 2005, Lima 2005, 105.

- 65Charles M. Eastman, Why we are here and where we are going: The evolution of CAD, in: ACADIA 1989: New Ideas and Directions for the 1990’s. ACADIA Conference Proceedings, 27–29 October 1989, Gainsville, Flor., §2.

- 66Ibid.

- 67Cf. Peng, Survey of Collaborative Drawing Tools.

- 68Cf. Kjeld Schmidt, Ina Wagner, Ordering systems: Coordinative practices and artifacts in architectural design and planning, in: Computer Supported Cooperative Work (CSCW): The Journal of Collaborative Computing, Vol. 13, Nr. 5–6, 2004, 349–408.

- 69E.g. AIA, AIA CAD Layer Guidelines: U.S. National CAD Standard Version 3, Washington, D.C. 2005 (3rd ed.; 1st ed. 1990).

- 70Tom Van Vleck, The history of electronic mail, 1 February 2001, http://www.multicians.org/thvv/mail-history.html, last viewed 30 March 2015.

- 71Ibid.

- 72Ibid.

- 73Licklider, Man-computer symbiosis, 8.

- 74Lawrence G. Roberts, Multiple computer networks and intercomputer communication, in: J. Gosden, B. Randell (Ed.), SOSP’67: Proceedings of the First ACM Symposium on Operating System Principles, New York 1967, 1.

- 75Ibid.

- 76Lawrence G. Roberts, Barry D. Wessler, Computer network development to achieve resource sharing, in: H. L. Cooke (Ed.), SJCC’70: Proceedings of the Spring Joint Computer Conference, Vol. 36, Montvale, New Jersey 1970, 543.

- 77Ian R. Hardy, The Evolution of ARPANET email. History thesis paper, Berkeley, Cal. 1996, http://www.ifla.org.sg/documents/internet/hari1.txt, last viewed 30 March 2015, 6.

- 78Much of the pioneering work on packet switching was done by Donald Davies and his colleagues at the British National Physical Laboratory. Cf. Donald Watts Davies: Proposal for the development of a national communication service for on-line data processing, Manuscript, Teddington (National Physical Laboratory) 15 December 1965, http://www.cs.utexas.edu/users/chris/DIGITAL_ARCHIVE/NPL/DaviesLetter.html, last viewed 30 March 2015; Davies, Donald Watts: Proposal for a Digital Communication Network, Teddington (National Physical Laboratory) June 1966, http://www.dcs.gla.ac.uk/~wpc/grcs/Davies05.pdf, last viewed 30 March 2015.

- 79Cf. Hardy, The Evolution of ARPANET email; Raymond S. Tomlinson, The first network email, 2001, http://openmap.bbn.com/~tomlinso/ray/firstemailframe.html, last viewed 30 March 2015.

- 80Tomlinson, The first network email.

- 81Peter H. Salus, Casting the Net: From ARPANET to Internet and Beyond, Reading, Mass. 1995, 95.

- 82Cf. David H. Crocker, John J. Vittal, Kenneth T. Pogran, D. Austin Henderson, Jr., Standard for the format of ARPA network text messages, 21 November 1977, http://www.rfc-editor.org/rfc/rfc733.txt, last viewed 30 March 2015.

- 83Theodore H. Myer, David Dodds (1976), Notes on the development of message technology, in: D. M. Austin (Ed.), Berkeley Workshop on Distributed Data Management and Computer Networks, Berkley, Cal. 1976 (LBL-5315), 145.

- 84Frank Heart, Alexander A. McKenzie, John McQuillan, David Walden, ARPANET Completion Report, Bolt, Beranek and Newman library, 4 January 1978. (Also published as A History of the ARPANET: The First Decade, BBN 1981, Report #4799.), http://www.cs.utexas.edu/users/chris/DIGITAL_ARCHIVE/ARPANET/DARPA4799.pdf, p. III-110.

- 85Frank Heart, Alexander A. McKenzie, John McQuillan, David Walden, Draft ARPANET Completion Report, [Bound computer printout, unpublished], Bolt Beranek and Newman, 9 September 1977 (Quoted from Janet Ellen Abbate, Inventing the Internet, Cambridge, Mass., London 1999), III–67; Abbate, Inventing the Internet, 109.

- 86Abbate, Inventing the Internet, 78.

- 87Abbate, Inventing the Internet, 104f.

- 88Abbate, Inventing the Internet, 104–106.

- 89Cf. Katie Hafner, Matthew Lyon, Where Wizards Stay up Late: The Origins of the Internet, London 2003 (2nd ed.; 1st ed., 1996), 189, 194.

- 90Cf. John S. Quarterman, The Matrix: Computer Networks and Conferencing Systems Worldwide, Bedford, Mass. 1990, 235–251; Michael Hauben, Ronda Hauben, Netizens: On the History and Impact of Usenet and the Internet, Los Alamitos, Cal. 1997.

- 91Cf. James Gillies, Robert Cailliau, How the Web was Born: The Story of the World Wide Web, Oxford 2000.

- 92Cf. ibid.